Understand Your Memory Constraints

Embedded systems aren’t built for luxury. Unlike general-purpose computers, they’re tight on space and resources. So knowing the difference between RAM and flash isn’t optional; it’s foundational. RAM is volatile and crucial for runtime performance: stacks, heaps, buffers. It’s fast but eats power, and when your device reboots, all of it’s gone. Flash, on the other hand, is non-volatile. It’s long-term storage: think firmware, static configs, lookup tables. But it comes with tradeoffs. Writes are slower than reads, flash has to be erased in whole sectors before it can be rewritten, and each sector survives only a limited number of erase cycles.

Low-power microcontrollers (MCUs) introduce extra constraints. They often have kilobytes, not megabytes, of RAM, and limited flash endurance. That means your system design must be lean. Do more with less: pre-process data before buffering it in RAM, minimize in-place writes, and allocate carefully.
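As one example of pre-processing before buffering, here’s a minimal sketch that stores temperature readings as signed 16-bit hundredths of a degree instead of raw `float` samples. On typical MCUs this halves the buffer. The names `quantize_centi` and `buffer_sample` are hypothetical, and so is the assumption that a range of -327.68 to 327.67 °C with 0.01 °C resolution is good enough for your sensor.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: degrees C -> hundredths of a degree, rounded half
 * away from zero and clamped to the int16_t range (-327.68..327.67 C). */
static int16_t quantize_centi(float celsius)
{
    float scaled = celsius * 100.0f;
    scaled += (scaled >= 0.0f) ? 0.5f : -0.5f;   /* round before the truncating cast */
    if (scaled >= (float)INT16_MAX) return INT16_MAX;
    if (scaled <= (float)INT16_MIN) return INT16_MIN;
    return (int16_t)scaled;
}

/* Convert back only when the value is actually needed (e.g. for display). */
static float dequantize_centi(int16_t centi)
{
    return (float)centi / 100.0f;
}

#define SAMPLE_CAPACITY 64
static int16_t sample_buf[SAMPLE_CAPACITY];  /* 128 bytes; 64 floats would typically need 256 */
static size_t  sample_count;

/* Returns -1 when full so the caller can flush or drop (policy is up to you). */
static int buffer_sample(float celsius)
{
    assert(sample_count <= SAMPLE_CAPACITY);
    if (sample_count == SAMPLE_CAPACITY) return -1;
    sample_buf[sample_count++] = quantize_centi(celsius);
    return 0;
}
```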

Storage needs vary with the product’s life cycle. If your firmware rarely updates and your logs are minimal, then a simple static map might work. But if your system requires periodic updates, dynamic configuration, or continuous logging, you’ll need a smarter layout: maybe dual flash segments for OTA updates, or a circular buffer for runtime logs.

Whatever your scenario, think ahead. Map your memory strategy to the device’s purpose and pace of change. Storage isn’t just about saving space; it’s about staying operational.

Choose the Right File System

When it comes to embedded systems, your file system isn’t just back-end plumbing; it directly affects reliability, speed, and how long your flash survives. The lightweights in this space are LittleFS, SPIFFS, and good old FAT (typically used with SD cards). Each has its own angle.

LittleFS is the new favorite in many minimalist setups. It’s designed for embedded flash, with wear leveling and power-loss resilience baked in. Think of it as low overhead with a long-term mindset. SPIFFS is older, simpler, and light on RAM. However, it doesn’t support directories (its namespace is flat), it isn’t built for power-loss resilience the way LittleFS is, and its performance can drop as the file system fills up. It does implement its own wear leveling, and it still works for small configs where simplicity beats robustness.

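FAT comes into play mainly when you’re using SD cards or need files that external readers can open. Cross-platform compatibility is the win here, but it brings overhead, and FAT itself offers neither wear leveling nor power-loss protection. SD cards handle wear leveling inside their own controllers. On raw flash, you’d need a flash translation layer underneath FAT, plus your own safeguards against interrupted writes.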

You’ll also want to think about journaling. Journaling systems help maintain data integrity, especially during unexpected resets or power loss, but they increase write cycles, which is a concern for flash lifespan. Non-journaling systems may be faster and lighter on the flash, but one badly timed reset and your data could be corrupted.
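If you’d rather not rely on a journaling file system for one small, critical record, a common lighter-weight pattern is to alternate between two slots. Each slot is stamped with a sequence number and a CRC. A save always overwrites the older slot. On boot, the newest slot whose CRC checks out wins, so a torn write just leaves the previous copy in charge. This is a minimal sketch that simulates the two flash sectors with a RAM array. The record layout, the CRC-32 choice, and all names are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    uint32_t seq;          /* incremented on every save (wraparound ignored here) */
    uint8_t  payload[24];  /* application config bytes */
    uint32_t crc;          /* CRC-32 over seq and payload */
} record_t;

static record_t slots[2];  /* stand-in for two flash sectors */

/* Bitwise CRC-32 (reflected, polynomial 0xEDB88320): small and slow, fine for tiny records. */
static uint32_t crc32(const uint8_t *p, size_t n)
{
    uint32_t crc = 0xFFFFFFFFu;
    while (n--) {
        crc ^= *p++;
        for (int i = 0; i < 8; i++)
            crc = (crc >> 1) ^ (0xEDB88320u & (0u - (crc & 1u)));
    }
    return ~crc;
}

static uint32_t record_crc(const record_t *r)
{
    return crc32((const uint8_t *)r, offsetof(record_t, crc));
}

static int slot_valid(const record_t *r) { return r->crc == record_crc(r); }

/* Index of the newest slot with a valid CRC, or -1 if neither is valid. */
static int newest_slot(void)
{
    int a = slot_valid(&slots[0]), b = slot_valid(&slots[1]);
    if (a && b) return (slots[1].seq > slots[0].seq) ? 1 : 0;
    if (a) return 0;
    if (b) return 1;
    return -1;
}

static void save_record(const uint8_t payload[24])
{
    assert(payload != NULL);
    int cur = newest_slot();
    int dst = (cur == 0) ? 1 : 0;      /* never overwrite the newest good copy */
    record_t r;
    memset(&r, 0, sizeof r);
    r.seq = (cur < 0) ? 1u : slots[cur].seq + 1u;
    memcpy(r.payload, payload, sizeof r.payload);
    r.crc = record_crc(&r);
    slots[dst] = r;                    /* on real flash: erase the sector, then program */
}
```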

Bottom line: pick what matches your storage medium, access needs, and longevity goals. There’s no silver bullet, just tradeoffs you have to own early on.

Data Compression Techniques

Compression is your first line of defense when storage is tight. Lossless methods like LZ4 and gzip let you shrink files without sacrificing a single byte of data. They’re fast, well supported, and strike a decent balance between space savings and performance, which is key for embedded systems.
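LZ4 and zlib/gzip are full libraries, so rather than guess at their APIs, here’s the simplest lossless scheme, run-length encoding (RLE), to make the compress/decompress round trip concrete. RLE is not LZ4. It only pays off on data with long runs, such as mostly unchanged status bytes or zero-filled regions. The `[count, value]` pair format and the function names are assumptions for this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Encode as [count, value] pairs with count 1..255. Returns bytes written,
 * or 0 if out_cap is too small (also 0 for empty input). Worst case is 2 * n. */
static size_t rle_encode(const uint8_t *in, size_t n, uint8_t *out, size_t out_cap)
{
    size_t o = 0;
    for (size_t i = 0; i < n; ) {
        uint8_t v = in[i];
        size_t run = 1;
        while (i + run < n && in[i + run] == v && run < 255) run++;
        if (o + 2 > out_cap) return 0;
        out[o++] = (uint8_t)run;
        out[o++] = v;
        i += run;
    }
    return o;
}

/* Expand pairs back into the original bytes. Returns bytes written, or 0 on overflow. */
static size_t rle_decode(const uint8_t *in, size_t n, uint8_t *out, size_t out_cap)
{
    size_t o = 0;
    assert(n % 2 == 0);  /* well-formed input is whole pairs */
    for (size_t i = 0; i + 1 < n; i += 2) {
        uint8_t run = in[i], v = in[i + 1];
        if (o + run > out_cap) return 0;
        for (uint8_t k = 0; k < run; k++) out[o++] = v;
    }
    return o;
}
```

On 290 zero bytes followed by 10 bytes of `7`, this produces three pairs (6 bytes). On noisy data with no runs, it doubles the size, which is why the choice of algorithm has to follow the data.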

When to compress? Focus on what doesn’t need to change in real time. Static assets, firmware packages, and logs are prime candidates. Firmware updates can be compressed before transmission and decompressed during install, typically as a stream written straight to flash, since the full image rarely fits in RAM. Logs can be compressed after collection if bandwidth or flash space is limited.

But it’s not just about squeezing things smaller. You need to weigh CPU and memory usage during compression and decompression. Lighter algorithms like LZ4 offer speed with modest compression ratios. gzip (DEFLATE) gets you better savings at the cost of more CPU cycles and more RAM, since a DEFLATE decompressor may need a history window of up to 32 KB. Compress too aggressively and you’ll slow down access times or put undue stress on a low-power processor.

The goal? Optimize for the long haul. Compress what benefits most, leave hot-path data alone, and test access latency against real-world use. Done right, compression adds capability without adding much cost.

Minimize Write Cycles to Extend Flash Life

Flash memory wears out. That’s a hard truth in embedded systems design, and ignoring it shortens your product lifespan. The biggest culprit? Excessive write operations. You need to be strategic.

Start with caching. Smart caching avoids hammering the same memory blocks. Buffer small changes in RAM and write them out in batches rather than immediately. This lowers write frequency and helps manage power in low-energy setups.

Batching operations is next. Rather than writing each individual sensor reading or config change, queue them. Dump the whole set when it hits a threshold or timeout. It’s simple and extends your flash life significantly.
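Here’s a minimal sketch of caching and batching together. Records accumulate in a RAM buffer and go to flash in one write when the buffer fills or a timeout elapses. `flash_write` is a hypothetical stand-in for your driver or file-system call (here it only counts calls). The 64-byte buffer and 5-second timeout are placeholders.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define BATCH_BYTES      64u
#define BATCH_TIMEOUT_MS 5000u

static unsigned flash_writes;  /* counts simulated flash writes */

/* Hypothetical driver call; a real one would program the buffer to flash. */
static void flash_write(const uint8_t *buf, size_t len)
{
    (void)buf; (void)len;
    flash_writes++;
}

static uint8_t  batch[BATCH_BYTES];
static size_t   batch_len;
static uint32_t batch_started_ms;  /* time the oldest queued record arrived */

static void batch_flush(void)
{
    if (batch_len == 0) return;
    flash_write(batch, batch_len);
    batch_len = 0;
}

/* Queue one record; flush first if it would not fit. */
static void batch_append(const void *rec, size_t len, uint32_t now_ms)
{
    assert(len <= BATCH_BYTES);
    if (batch_len + len > BATCH_BYTES) batch_flush();
    if (batch_len == 0) batch_started_ms = now_ms;
    memcpy(batch + batch_len, rec, len);
    batch_len += len;
}

/* Call periodically (e.g. from the main loop) to enforce the timeout.
 * Unsigned subtraction keeps this correct across millisecond-counter wraparound. */
static void batch_poll(uint32_t now_ms)
{
    if (batch_len && (uint32_t)(now_ms - batch_started_ms) >= BATCH_TIMEOUT_MS)
        batch_flush();
}
```

Sixteen 8-byte records cost one flash write so far, instead of sixteen, with the second batch waiting for the buffer to fill or the timeout to pass. The catch: anything still sitting in `batch` is lost on a sudden reset, so size the buffer and timeout to the amount of data you can afford to lose.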

Finally, don’t skip wear leveling, especially when dealing with NOR or NAND flash. It spreads out writes across memory sectors, preventing hotspots from burning out early. Some file systems handle this internally (LittleFS is a decent choice), but be sure you understand how your storage layer works.
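To make the idea concrete, here’s a minimal sketch of the simplest form of wear leveling for a single frequently updated record. Instead of erasing and rewriting one sector every time, each save goes to the next sector in a ring, so erases spread evenly. The sector count, the erase counters, and `sector_rewrite` are simulated and hypothetical. A real implementation also needs a way to find the newest copy after reboot, for example a sequence number stored in each copy.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_SECTORS 8u

static uint32_t erase_count[NUM_SECTORS];  /* simulated per-sector wear */
static uint32_t next_sector;               /* where the next save lands */

/* Hypothetical erase + program of one sector; here we only record the wear. */
static void sector_rewrite(uint32_t sector)
{
    assert(sector < NUM_SECTORS);
    erase_count[sector]++;
}

/* Rotate saves across all sectors instead of always reusing sector 0. */
static uint32_t save_leveled(void)
{
    uint32_t s = next_sector;
    sector_rewrite(s);
    next_sector = (next_sector + 1u) % NUM_SECTORS;
    return s;
}
```

After 800 saves, each of the eight sectors has seen 100 erases. Without rotation, one sector would have taken all 800 and reached its endurance limit eight times sooner.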

If your firmware’s constantly writing, your flash won’t last. Optimize now, or pay later with corrupted data and early failures.

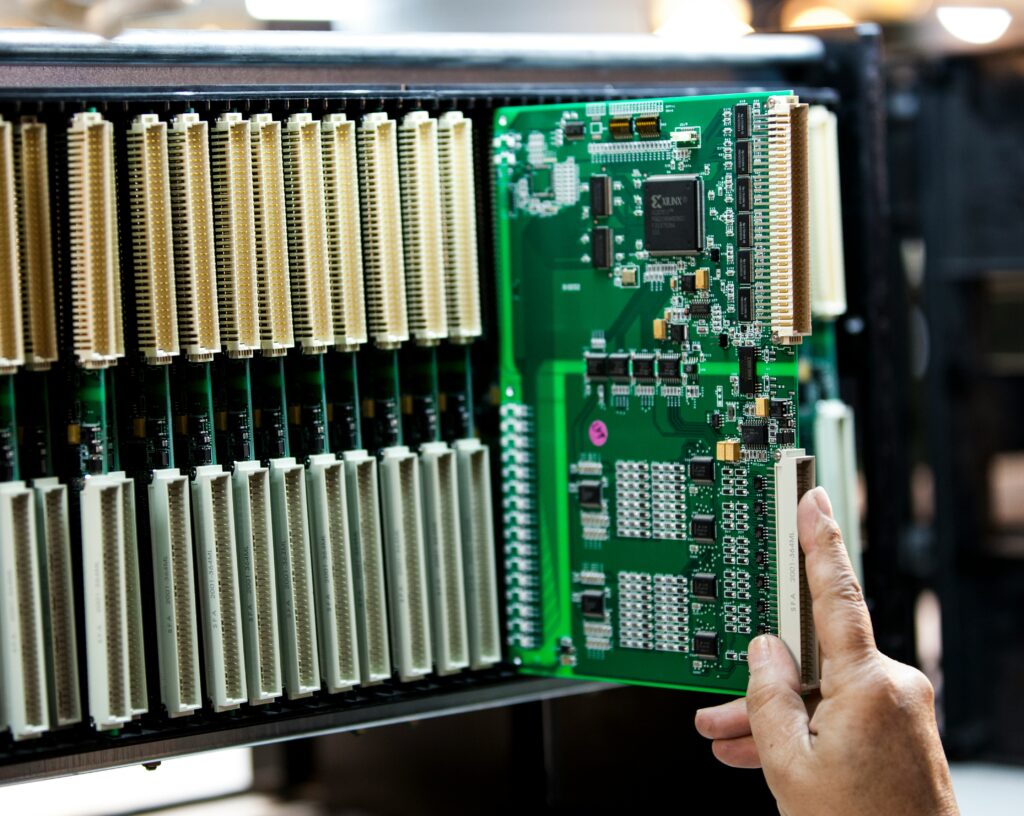

Leverage Smart Partitioning

If your embedded system is storing everything in one place, stop and partition smarter. At the very least, separate firmware code, configuration files, and runtime data into distinct memory regions. Why? Because updates fail, users mess with settings, and runtime logs pile up. Mixing everything heightens the risk of corruption and makes recovery a nightmare.

Redundant partitions are another must-have if you’re supporting OTA (over-the-air) updates: one active, one backup. If that shiny new firmware fails mid-update or won’t boot, the system can safely roll back instead of bricking. No tech support call necessary.
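The rollback logic can be sketched as a small boot-time decision. This sketch assumes a hypothetical metadata record, kept in its own partition, that records which slot is active, whether a freshly installed image is still on trial, and how many trial boots it has used. It also assumes the new firmware "confirms" itself once it has started correctly. The field names and the three-attempt limit are placeholders, not any particular bootloader’s format.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    uint8_t active;       /* slot to boot: 0 or 1 */
    bool    pending;      /* true right after an OTA install, until confirmed */
    uint8_t trial_boots;  /* boots attempted while pending */
} boot_meta_t;

#define MAX_TRIAL_BOOTS 3u

/* Called by the bootloader on every reset; returns the slot to boot.
 * A real bootloader would persist *m to flash before jumping. */
static uint8_t choose_boot_slot(boot_meta_t *m)
{
    assert(m->active <= 1);
    if (m->pending) {
        if (m->trial_boots >= MAX_TRIAL_BOOTS) {
            m->active ^= 1u;      /* new image never confirmed: roll back */
            m->pending = false;
            m->trial_boots = 0;
        } else {
            m->trial_boots++;     /* give the new image another try */
        }
    }
    return m->active;
}

/* Called by the OTA agent after writing the new image to the inactive slot. */
static void mark_update_installed(boot_meta_t *m)
{
    m->active ^= 1u;
    m->pending = true;
    m->trial_boots = 0;
}

/* Called by the new firmware once it passes its own health checks. */
static void confirm_image(boot_meta_t *m)
{
    m->pending = false;
    m->trial_boots = 0;
}
```

If the new image keeps crashing before it calls `confirm_image`, the fourth reset boots the old slot again.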

Then there’s security. Isolating sensitive data (e.g., encryption keys, credentials, proprietary logic) in its own partition makes it harder to tamper with, especially when that partition can be access-protected or encrypted. Compartmentalization doesn’t just protect data; it limits the damage when things go sideways. Think of it less as an optimization tactic and more as architectural insurance. Clean. Predictable. Less likely to blow up later.

Efficient Logging Strategies

Logging is essential, but storage is limited. That means every log byte needs to earn its place. Instead of letting logs pile up until memory becomes a problem, use ring buffers or circular logs. These structures recycle space automatically, keeping only the most recent, relevant entries without running out of room.
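Here’s a minimal circular log, assuming fixed-size text entries held in RAM. The same index arithmetic works over flash sectors. Once the buffer is full, each new entry overwrites the oldest one. The entry size, capacity, and function names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define LOG_ENTRIES   4
#define LOG_ENTRY_LEN 32

static char   log_buf[LOG_ENTRIES][LOG_ENTRY_LEN];
static size_t log_head;   /* next slot to write */
static size_t log_count;  /* valid entries, never more than LOG_ENTRIES */

/* Append a message, silently overwriting the oldest entry when full. */
static void log_put(const char *msg)
{
    assert(msg != NULL);
    strncpy(log_buf[log_head], msg, LOG_ENTRY_LEN - 1);
    log_buf[log_head][LOG_ENTRY_LEN - 1] = '\0';  /* always terminate */
    log_head = (log_head + 1) % LOG_ENTRIES;
    if (log_count < LOG_ENTRIES) log_count++;
}

/* i = 0 is the oldest retained entry; returns NULL past the end. */
static const char *log_get(size_t i)
{
    if (i >= log_count) return NULL;
    size_t oldest = (log_head + LOG_ENTRIES - log_count) % LOG_ENTRIES;
    return log_buf[(oldest + i) % LOG_ENTRIES];
}
```

After six messages into four slots, the two oldest are gone and `log_get(0)` returns the third message written.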

Next: don’t log everything; log smart. Not every event deserves a log entry. Apply filters at the firmware or application level to capture only high-value events. That might mean tracking only failures, key milestones, or system anomalies. Less noise means faster diagnosis and more efficient storage use.

Finally, consider offloading your logs. During maintenance windows or scheduled check-ins, push logs out to an external device or cloud endpoint. This both clears space and preserves long-term records without needing a constant connection. Embedded systems perform better when logging is lean, targeted, and temporary.

Protocol Efficiency and Data Flow

In embedded systems, efficiency isn’t a luxury; it’s survival. Bulky protocols and verbose data formats eat power, waste memory, and slow everything down. Cutting that overhead with compact binary serialization formats like CBOR, Protobuf, or MessagePack is a no-brainer. They strip out the fluff and keep only what’s essential.
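To see where the savings come from, here’s a hand-rolled encoder for a tiny subset of CBOR (RFC 8949): a map of short text keys to unsigned integers. It’s only meant for comparing sizes against equivalent JSON text, not as a replacement for a real CBOR library. The keys and values are made up.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Write a CBOR head: 3-bit major type plus an argument, using the
 * shortest form (inline below 24, then 1, 2 or 4 big-endian bytes). */
static size_t cbor_head(uint8_t *out, uint8_t major, uint32_t arg)
{
    uint8_t mt = (uint8_t)(major << 5);
    if (arg < 24)      { out[0] = mt | (uint8_t)arg; return 1; }
    if (arg <= 0xFF)   { out[0] = mt | 24; out[1] = (uint8_t)arg; return 2; }
    if (arg <= 0xFFFF) { out[0] = mt | 25; out[1] = (uint8_t)(arg >> 8);
                         out[2] = (uint8_t)arg; return 3; }
    out[0] = mt | 26;
    out[1] = (uint8_t)(arg >> 24); out[2] = (uint8_t)(arg >> 16);
    out[3] = (uint8_t)(arg >> 8);  out[4] = (uint8_t)arg;
    return 5;
}

typedef struct { const char *key; uint32_t value; } kv_t;

/* Encode a map (major type 5) of text keys (major type 3) to unsigned
 * ints (major type 0). No bounds checks: size `out` generously. */
static size_t cbor_encode_map(const kv_t *kv, size_t n, uint8_t *out)
{
    assert(kv != NULL || n == 0);
    size_t o = cbor_head(out, 5, (uint32_t)n);
    for (size_t i = 0; i < n; i++) {
        size_t klen = strlen(kv[i].key);
        o += cbor_head(out + o, 3, (uint32_t)klen);
        memcpy(out + o, kv[i].key, klen);
        o += klen;
        o += cbor_head(out + o, 0, kv[i].value);
    }
    return o;
}
```

For the reading `{"t":2137,"h":45,"b":3}`, this produces 13 bytes versus 23 bytes of JSON text. Most of the difference comes from dropping quotes, colons, and commas, and from storing numbers in binary rather than as decimal digits.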

Even better, delta updates take that minimalism further. Instead of pushing complete data sets or firmware versions every time, you sync only what’s changed. Save bandwidth, reduce write cycles, and speed up updates: all wins in a resource-constrained environment.
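At the data level, a delta update can be as simple as sending `(offset, new byte)` pairs for only the bytes that differ between the device’s current copy and the new one. This sketch assumes both copies are the same length and that changes are sparse. Real firmware delta tools use far more sophisticated binary diffing, and the patch format and names here are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { uint16_t offset; uint8_t value; } patch_t;

/* Record every byte that differs. Returns the patch count, or -1 if `max`
 * patches are not enough (a full transfer is then probably cheaper anyway). */
static int delta_make(const uint8_t *cur, const uint8_t *next, size_t len,
                      patch_t *patches, size_t max)
{
    assert(len <= UINT16_MAX + 1u);  /* offsets must fit in 16 bits */
    size_t n = 0;
    for (size_t i = 0; i < len; i++) {
        if (cur[i] == next[i]) continue;
        if (n == max) return -1;
        patches[n].offset = (uint16_t)i;
        patches[n].value  = next[i];
        n++;
    }
    return (int)n;
}

/* On the device: touch only the bytes that changed. */
static void delta_apply(uint8_t *buf, const patch_t *patches, int n)
{
    for (int i = 0; i < n; i++)
        buf[patches[i].offset] = patches[i].value;
}
```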

Smart communication is smart storage. Less transferred means less stored, managed, and processed on the device. For more on how this plays out in real-world systems, check out Low Latency Communication Protocols for Smart Devices.

Design-Time Decisions That Pay Off Later

When working with tight storage budgets, the easiest wins often come early, during the design phase. Start by pulling in memory analyzers and estimators, such as your linker’s map file and your toolchain’s size report. These tools show what your build actually consumes, not just what the spec sheets say. Don’t guess. Measure.
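Stack usage doesn’t show up in a static size report. One cheap way to measure it is stack painting: fill the stack region with a known pattern at startup, let the system run, then count how much of the pattern is still untouched. This sketch paints a plain array to show the arithmetic. On a real MCU you’d paint the region between linker-defined stack symbols, whose names depend on your toolchain. The `0xA5` pattern is an arbitrary choice.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define STACK_PAINT 0xA5u

/* Fill the whole region with the marker pattern before the stack is used. */
static void stack_paint(uint8_t *base, size_t size)
{
    assert(base != NULL);
    for (size_t i = 0; i < size; i++) base[i] = STACK_PAINT;
}

/* Stacks usually grow downward, so untouched bytes sit at the low end.
 * Returns the peak number of bytes used (the high-water mark). */
static size_t stack_high_water(const uint8_t *base, size_t size)
{
    size_t untouched = 0;
    while (untouched < size && base[untouched] == STACK_PAINT) untouched++;
    return size - untouched;
}
```

The estimate can read slightly low if the deepest frame happened to store the pattern value itself. The estimate is also only as good as the workload you ran, so exercise the worst-case paths before reading it.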

Next, think ahead: build in debugging hooks, but do it smartly. Avoid heavy-footprint logging or massive trace buffers baked into the final image. Go lightweight: conditional logging, flags that activate on demand, or memory-mapped diagnostics you can toggle remotely. The trick is to support field-level troubleshooting without dragging the whole system down.
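Here’s one way to get both properties in C. Trace calls compile out entirely when `DIAG_ENABLE` is 0. In builds where they exist, a runtime bitmask, which a remote command could set, decides which subsystems actually emit. The macro, the category bits, `diag_mask`, and `storage_commit` are hypothetical names. `printf` stands in for whatever UART or RAM trace sink you use.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Normally set by the build system (e.g. -DDIAG_ENABLE=0 for release);
 * defaulted here so the sketch runs as-is. */
#ifndef DIAG_ENABLE
#define DIAG_ENABLE 1
#endif

enum { DIAG_STORAGE = 1u << 0, DIAG_RADIO = 1u << 1, DIAG_POWER = 1u << 2 };

static volatile uint32_t diag_mask;  /* 0 = silent; set bits on demand at runtime */
static unsigned diag_emitted;        /* counts emitted traces for this sketch */

#if DIAG_ENABLE
#define DIAG(cat, ...)                     \
    do {                                   \
        if (diag_mask & (cat)) {           \
            diag_emitted++;                \
            printf(__VA_ARGS__);           \
        }                                  \
    } while (0)
#else
#define DIAG(cat, ...) do { } while (0)    /* no code, no strings in the image */
#endif

static void storage_commit(size_t bytes)
{
    assert(bytes > 0);
    DIAG(DIAG_STORAGE, "commit %zu bytes\n", bytes);
    (void)bytes;  /* the actual commit work is out of scope for this sketch */
}
```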

Finally, once your code is in the wild, don’t assume your design assumptions were correct. Profile real-world usage. Watch how your buffers behave under edge cases, look at read/write ratios, and track actual file sizes over time. What your system does in the lab is rarely what it does in the field. Validate early. Adjust often.

Make Optimization a Continuous Practice

Storage tuning isn’t something you check off once and walk away from. Firmware grows. Features evolve. What worked last quarter might bottleneck you six months from now. Make it standard practice to review memory use at each firmware milestone, not just for bugs but to catch creeping bloat early.

Automate what you can. Integrate storage footprint checks into CI/CD pipelines so regressions get spotted before they ship. Simple scripts can track partition usage, write rates, and file system integrity across builds. It’s not flashy, but it saves you from surprises when your update bricks devices in the field.
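Here’s a sketch of such a check. It assumes your build runs GNU `size` in its default Berkeley format on the firmware ELF and feeds the numeric line to this code. Flash use is `text + data`, because initialized data is stored in flash and copied to RAM at startup. Static RAM use is `data + bss`. Heap and stack aren’t counted. The budgets and the sample line are placeholders.

```c
#include <assert.h>
#include <stdio.h>

/* Parse one data line of Berkeley-format `size` output, e.g.
 * "  48212    1204    9876   59292    e79c firmware.elf". Returns 0 on success. */
static int parse_size_line(const char *line, unsigned long *text,
                           unsigned long *data, unsigned long *bss)
{
    assert(line && text && data && bss);
    return sscanf(line, "%lu %lu %lu", text, data, bss) == 3 ? 0 : -1;
}

/* Returns 0 if within budget, 1 if over budget, 2 if the line didn't parse,
 * so a CI step can fail the build on any nonzero result. */
static int check_budgets(const char *line, unsigned long flash_budget,
                         unsigned long ram_budget)
{
    unsigned long text, data, bss;
    if (parse_size_line(line, &text, &data, &bss) != 0) return 2;
    unsigned long flash = text + data, ram = data + bss;
    if (flash > flash_budget || ram > ram_budget) {
        fprintf(stderr, "budget exceeded: flash %lu/%lu, RAM %lu/%lu\n",
                flash, flash_budget, ram, ram_budget);
        return 1;
    }
    return 0;
}
```

In a pipeline, a small `main` could read the second line of the `size` output from stdin and return `check_budgets(...)` as its exit status. A 64 KB flash and 16 KB RAM budget passes the sample line, and a 48 KB flash budget fails it.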

Looking ahead, specs and protocols will keep shifting: expect new flash standards, tighter edge constraints, and changing runtime expectations through 2026 and beyond. Teams that build in modularity and leave room to pivot will handle it smoothly. The rest will scramble.

Ongoing optimization isn’t optional. It’s how embedded systems stay lean, functional, and field ready in a world that refuses to stand still.